Doron Rasis


make a computer vision-based video game that doesn't feel janky with this one weird trick

(10-13-2019)

TLDR: the trick is to conceal your camera in a box.

why build an alt control game?

My friend Mary and I spent much of this year building Toot the Turtle Takes the Toilet Tunnel. Toot is a 7.5-foot-tall arcade game, where players control the fate of an abandoned pet turtle by pulling on real-life ropes. Usually when I say something like that to explain what Toot is, I get a blank stare until I whip out my phone and show pictures/videos of the game:

It’s an endless runner with really simple mechanics: navigate the sewer, avoid monsters, collect treats. We brainstormed and playtested other mechanics that added complexity to the game, but decided that because our controller was new and unintuitive, we wanted to keep the game itself familiar and easy to understand. I’m really happy with how Toot turned out. It was rewarding to watch children enjoying the game, couples playing it in co-op mode (one partner per rope), and folks in line taking pictures and getting excited for their turns.

Toot falls in a genre of computer games called “alt-control” games, whose defining feature is that they don’t use traditional controllers (like buttons, touchscreens, joysticks, Kinects, etc). Alt-control games, almost by definition, seldom make anyone money, and in my experience they are more difficult to come up with and build than normal video games. Then what good reason is there to build one? Great question, reader! Besides wanting to LARP as a Chuck E. Cheese or Dave & Buster’s bigwig, I can think of two good reasons:

- Even if you’re working with inexpensive off-the-shelf components from the Home Depot or Adafruit or Canal Plastics Center, what you build could still be at the cutting edge of human-computer interaction, since you’re creating a novel way to interface with a computer.

- They’re fun and special. Or, as our professor wrote in a really nice email to me and Mary: “At their best, alt-control games are more than the sum of their parts, fusing industrial design with game design and social engineering to produce an experience that cannot exist in a normal videogame.”

why use computer vision?

When we started thinking about this game in January, we knew for sure that we were gonna build an alt-control game. We were looking at cool scarves and old electro-mechanical arcade games for inspiration (Toot is directly inspired by Taito’s Ice Cold Beer). We also knew that we were really not interested in using the tools associated with most alt-control projects. Mary and I met in a student research group working on a wearable tech project, where we bonded over our shared hatred of soldering, holding wires, knowing what a resistor is, doing volts, touching amps, connecting stuff to GPIO pins, etc. I’m not interested in hearing the word breadboard unless it relates to food preparation (for me, ideally).

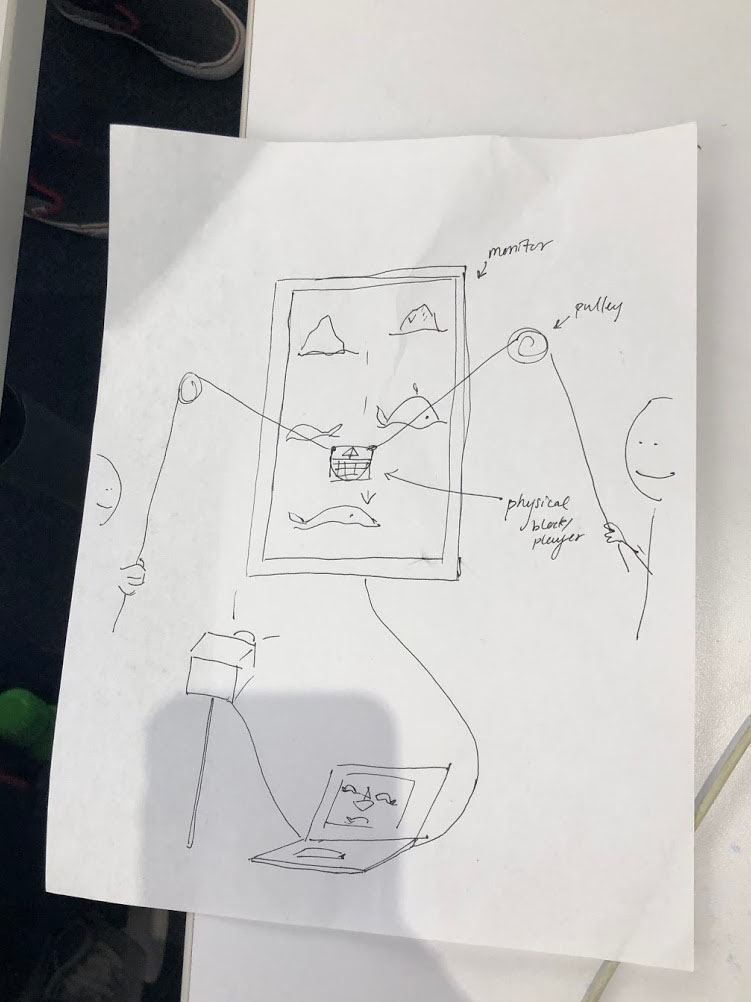

Computer vision seemed like a natural option—it’s nice to reduce the number of significant hardware peripherals in a project to one USB webcam, and write code that does all the heavy lifting. This was an early conception of the game (not too far off from the final product):

some image processing stuff if you care

This is not a comprehensive primer on realtime object tracking, and glosses over a lot of failed trials and iteration. Some stuff you might find helpful, though: this post about tracking lightbulbs for a videobooth, this collection of browser-based computer vision examples, this series of videos on computer vision, this post about the HSV colorspace, and this tutorial on object tracking using color. I give you permission to skip this section if you think it’s boring.

We decided to do the image processing using OpenCV, a monolithic and well-documented computer vision library with bindings in your favorite programming language. Unity (Mary’s tool of choice, which I don’t like for… reasons) uses the Mono runtime, and the OpenCV bindings available for C# used a dated static build of OpenCV that didn’t take advantage of the computer’s GPU. We ended up using the Python bindings for OpenCV and sending the turtle’s coordinates to our game over a UDP socket. I think a protocol like OSC might have had lower latency, but we were happy with the performance. Here’s a rough early prototype of the tracking using just OpenCV’s windowing system:

We use a range of HSV color values to find Toot in the video input, and an OpenCV function called findHomography to find a matrix that represents a transformation from camera coordinates to screen coordinates.
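If it helps to see what that matrix actually does, here’s a minimal, dependency-free sketch of the four-point case. This is not the code that ran in the cabinet: OpenCV’s findHomography does this work for you (and handles more than four points), so the pure-Python solver below is a stand-in for illustration. The function names and the marker coordinates are made up.

```python
def solve_linear(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate points (three markers in a line?)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= f * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography_from_4_points(src, dst):
    """Return a 3x3 matrix mapping each src (x, y) onto its dst (u, v)."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), rearranged to be linear
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        # v = (h3*x + h4*y + h5) / (h6*x + h7*y + 1), same trick
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(h, point):
    """Map a camera-space point into screen space."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Hypothetical calibration: where four markers show up in the camera image,
# and the screen corners they should land on.
camera_corners = [(102, 58), (538, 71), (551, 412), (95, 398)]
screen_corners = [(0, 0), (1920, 0), (1920, 1080), (0, 1080)]

H = homography_from_4_points(camera_corners, screen_corners)
turtle_on_screen = apply_homography(H, (320, 240))
```

Once the matrix exists, every tracked camera position goes through apply_homography before it reaches the game, so the game only ever deals in screen coordinates.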

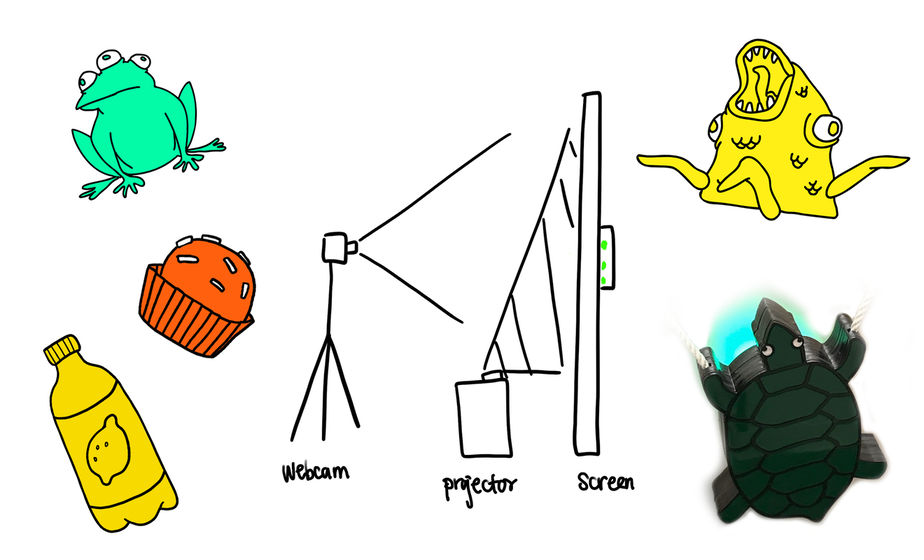

The shadow our short-throw projector cast over the player object was huge, so we decided to buy an inexpensive screen online and build a proscenium around it. This way, we could rear-project the game and track our turtle from a camera behind the screen by putting bright green LEDs inside of him:

Although the difference isn’t visible to humans, the image cast by a short-throw projector is brighter at the bottom than at the top. This messed with our tracking significantly. We got around this by programmatically finding where on the screen the projector was shining brightest, and using a different mask of HSV values on that part of the screen.
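Here’s a rough sketch of that two-mask idea, written with only Python’s standard library so it runs anywhere. It is an illustration under assumptions, not our tracker: with OpenCV you would threshold with something like inRange instead of looping over pixels, and the HSV ranges, the brightness threshold, and the function names here are all invented. Note that colorsys works with hue, saturation, and value in the 0 to 1 range.

```python
import colorsys


def in_hsv_range(rgb, lower, upper):
    """True if an (r, g, b) pixel with 0-255 channels falls in the HSV box."""
    hsv = colorsys.rgb_to_hsv(*(c / 255 for c in rgb))
    return all(lo <= x <= hi for x, lo, hi in zip(hsv, lower, upper))


def bright_rows(frame, threshold):
    """Row indices whose mean brightness (HSV value) exceeds the threshold."""
    rows = set()
    for y, row in enumerate(frame):
        mean_v = sum(max(px) / 255 for px in row) / len(row)
        if mean_v > threshold:
            rows.add(y)
    return rows


def find_marker(frame, hot_rows, normal_range, hot_range):
    """Centroid (x, y) of pixels matching the mask for their row, or None."""
    xs, ys = [], []
    for y, row in enumerate(frame):
        lower, upper = hot_range if y in hot_rows else normal_range
        for x, px in enumerate(row):
            if in_hsv_range(px, lower, upper):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return sum(xs) / len(xs), sum(ys) / len(ys)


# Made-up ranges for a green LED: (hue, saturation, value) lower/upper bounds.
NORMAL_RANGE = ((0.25, 0.5, 0.5), (0.45, 1.0, 1.0))
# In the projector's hot spot the green looks washed out, so accept lower
# saturation but insist on high brightness.
HOT_RANGE = ((0.25, 0.2, 0.8), (0.45, 1.0, 1.0))

# Tiny fake frame: two dark rows on top, two washed-out rows on the bottom.
calibration = [[(20, 20, 20)] * 4 for _ in range(2)] + \
              [[(230, 230, 230)] * 4 for _ in range(2)]
hot = bright_rows(calibration, threshold=0.6)

frame = [row[:] for row in calibration]
frame[3][3] = (180, 255, 180)  # washed-out green LED inside the hot spot
position = find_marker(frame, hot, NORMAL_RANGE, HOT_RANGE)
```

With a single mask, the washed-out pixel in the fake frame would be missed; switching masks by region is what lets it register.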

Whenever a volunteer at Babycastles wanted to re-calculate the homography matrix because the cabinet moved or the camera was knocked out of place, they could open the side of the cabinet (which was secretly a hinged door) and use a GUI to click on four markers with a mouse.
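For the curious, the UDP hand-off between the Python tracker and the game described earlier in this section could look something like the sketch below. The JSON message format, the port number, and the function names are assumptions for illustration, not what we shipped. The receiving side is also written in Python here only so the example can run on its own; in the real setup the listener lived in the game.

```python
import json
import socket

GAME_ADDR = ("127.0.0.1", 5005)  # hypothetical host and port for the game


def encode_position(x, y):
    """Pack a screen-space position into a small JSON datagram."""
    return json.dumps({"x": round(x, 1), "y": round(y, 1)}).encode("utf-8")


def decode_position(payload):
    """Inverse of encode_position; what the game side would do."""
    msg = json.loads(payload.decode("utf-8"))
    return msg["x"], msg["y"]


def send_position(sock, x, y, addr=GAME_ADDR):
    """Fire-and-forget: UDP gives no delivery guarantee, which is fine
    when a fresh position arrives every frame anyway."""
    sock.sendto(encode_position(x, y), addr)


def loopback_demo():
    """Send one position to a local listener and return what it received."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    receiver.bind(("127.0.0.1", 0))  # let the OS pick a free port
    receiver.settimeout(2.0)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        send_position(sender, 312.5, 640.0, addr=receiver.getsockname())
        payload, _ = receiver.recvfrom(1024)
        return decode_position(payload)
    finally:
        sender.close()
        receiver.close()
```

Calling loopback_demo() sends a single position to a throwaway local socket and returns the decoded coordinates. In a tracking loop you would create the sending socket once and call send_position every frame.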

cameras and computers and games

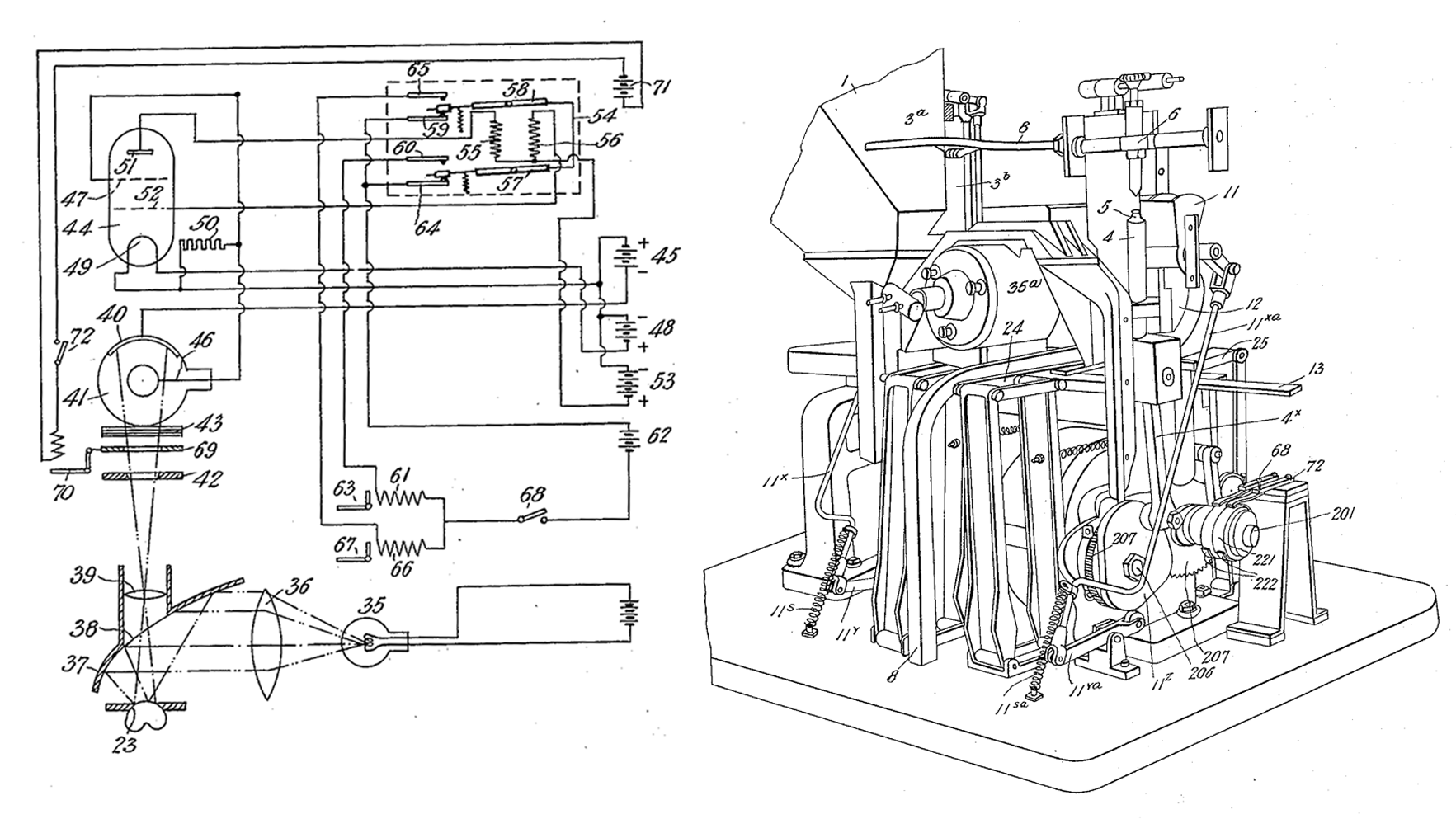

The oldest example of computer vision I found online was an optical sorting device described in a 1932 patent called “DEVICE FOR MECHANICALLY SORTING NATURAL AND ARTIFICIAL PRODUCTS ACCORDING TO THEIR COLOR AND GRADATIONS OF COLOR”. The patent protected the right of Arthur Weigl to make and sell a contraption that inspected and sorted coffee beans and other grains based on color:

This device (its patent claims) was the only economically feasible and working apparatus of its kind, because it used photo-electric cells in place of selenium cells.

All this is to say that… computer vision existed before microprocessors. Before ENIAC. Before Turing machines. And it worked robustly. I remember taking a tour of the Herr’s potato chip factory as an elementary-schooler in the early aughts and being unimpressed by computers telling robots to discard ugly potato chips. Making sense of the pixels in a 2D array seems like a trivial problem for computers. It’s one that’s been solved by a lot of really smart computer scientists: powerful software libraries enable programmers to take advantage of algorithms that resulted from years of research in computational geometry and statistics.

Then why do the gesture-based controls of computer vision-based games feel so wonky? It’s like the technology underlying them isn’t quite ready for prime time. I’m no “video” “game” “critic”, but can say with some authority (as a former PlayStation EyeToy owner) that wildly waving your hands in front of a camera until a television responds to you is not a “fun” “game” “mechanic”. Facebook’s videochat games are boring (“uhhhh… what if… your nose was a… computer mouse?”). Even when other input devices, like infrared cameras (Wiimote) or depth cameras (Kinect), enhance the abilities of webcams to create intuitive, nearly touchless interfaces, it can feel like players are essentially using a giant and horrible multitouch display.

So what’s a developer committed to fun and good times and having a blast to do? Add a layer of physical objects between the camera and the player! Computer vision works well in manufacturing because the camera and the objects it is detecting live within a contained environment, where lighting and other variables are controlled. What if players didn’t need to be aware of a camera to use it as an input device?

Toot would have felt a lot less magical if the webcam tracking our protagonist didn’t live inside of our cabinet. Players would need to be careful not to interfere with the camera’s line-of-sight, and the game itself would be significantly more sensitive to outside lighting conditions.

Our game (and systems like it) only scratches the surface of how hidden video capture devices can be used to create novel games. Games that use webcams hidden in boxes with opaque surfaces, open slots, levers/springy buttons/wheels with markers on their hidden sides, or that don’t even use video as an output device are the most promising for computer vision-based gaming.

Cheap webcams can be powerful, but when money gets dumped into using them as input devices for no reason, they don’t create compelling experiences. If you’ve got the time and interest, go ahead and use them to make something you think is fun!